Table of contents

- Completed Tutorial to NVIDIA Jetson AI JetBot Robot Car Project

- Introduction:

- Hardware

- Relevant Software:

- Appendix A: quick reference for Jetson Inference demo

Completed Tutorial to NVIDIA Jetson AI JetBot Robot Car Project

Introduction:

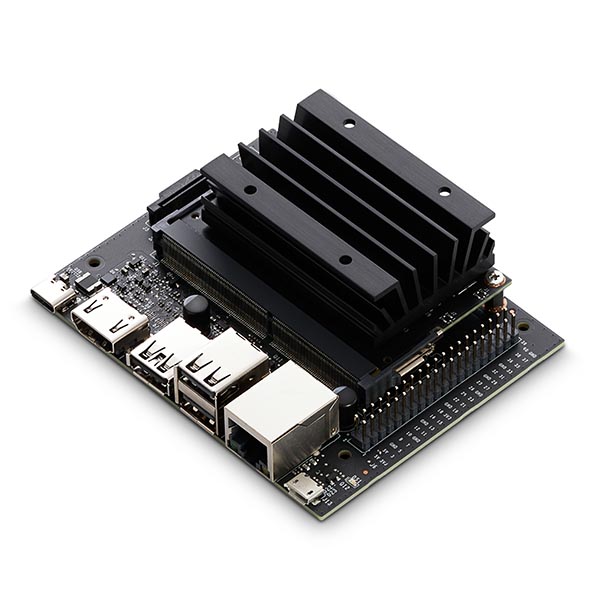

I was first inspired by the Jetson Nano Developer Kit that NVIDIA released on March 18th, 2019 (check out this post: NVIDIA Announces Jetson Nano: $99 Tiny, Yet Mighty NVIDIA CUDA-X AI Computer That Runs All AI Models). Since then, more and more supporting resources have been published, such as the JetsonHacks channel, which I’ve been following for a year. Many Jetson AI projects have also been created, such as JetBot and JetRacer (check out this link to read more, link). As an electronics hobbyist, I wanted to build one for myself.

Without further ado, let’s start the journey. This article covers everything you need to go from nothing to a fully running JetBot.

Hardware

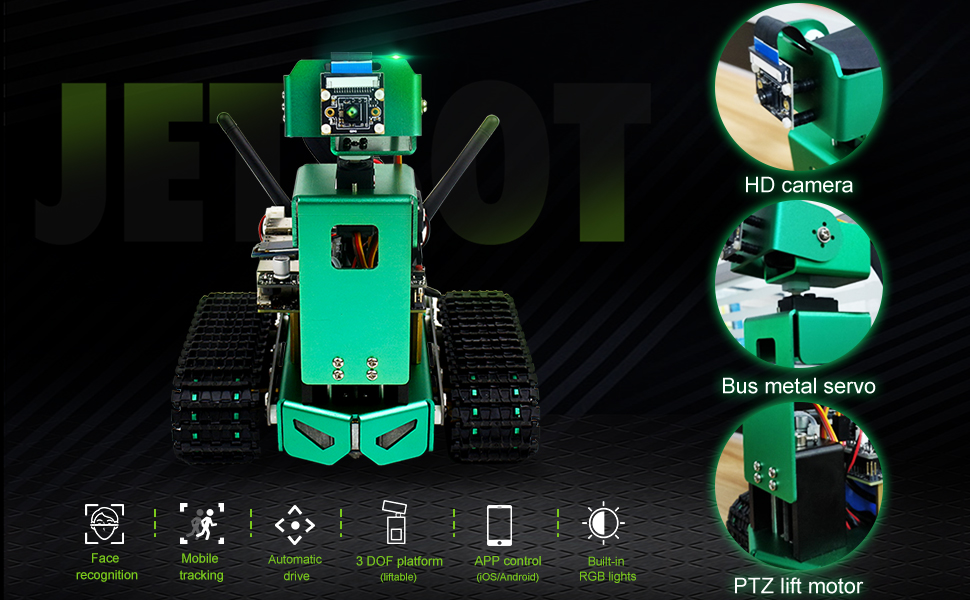

The first step is to obtain the hardware. If you really want to build one yourself, you can check out this website for the Bill of Materials. However, if you are like me and only want to focus on the software side and on building an intelligent robot, you can purchase a third-party kit (I purchased mine from Yahboom on Amazon).

If you also purchased from Yahboom, you can use their website for the hardware assembly instructions. They also provide some deep learning modules, such as face recognition, object detection, mobile tracking, and more. (However, I found that all of that code is already provided by NVIDIA’s Jetson Hello AI World project; here is their GitHub repo.)

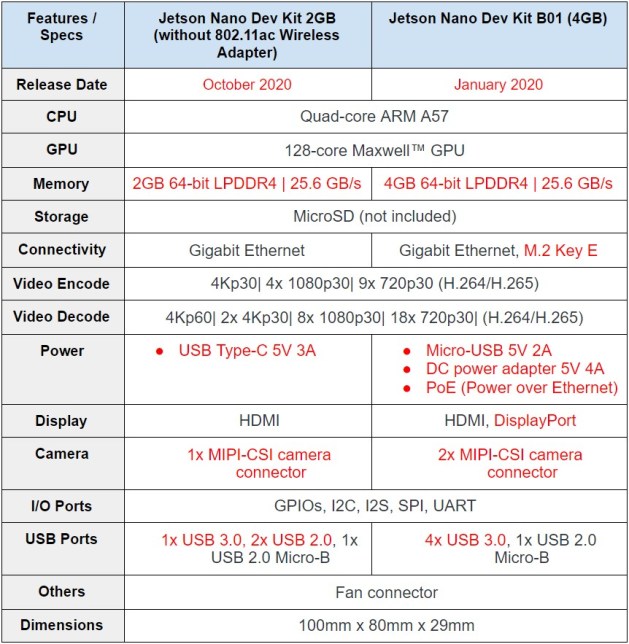

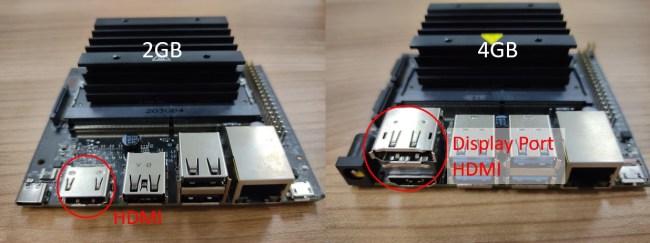

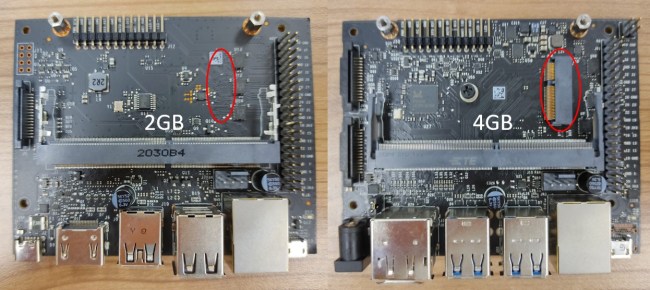

Comparison of Jetson Nano: A02 vs B01 2GB vs B01 4GB

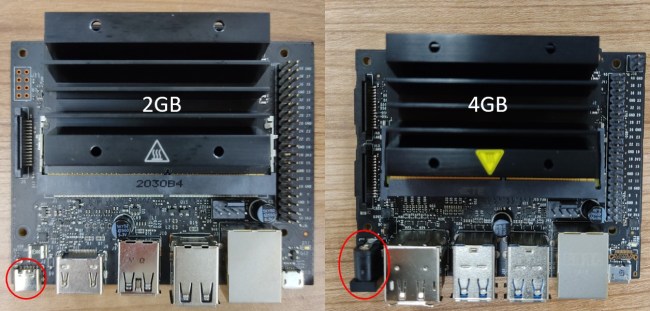

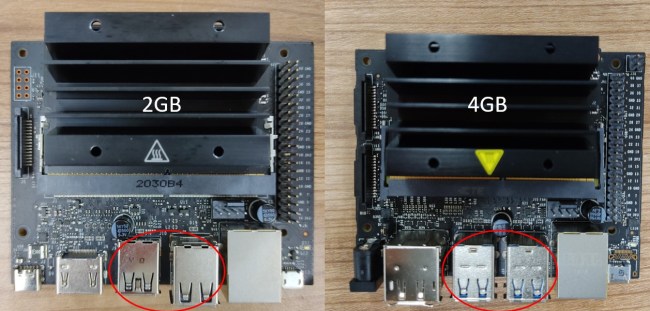

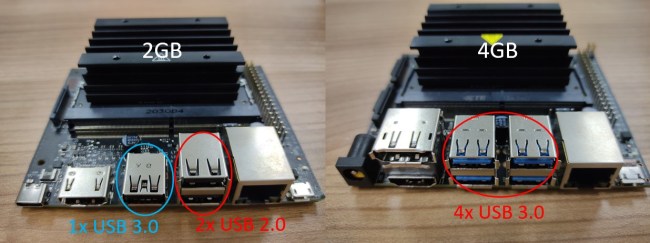

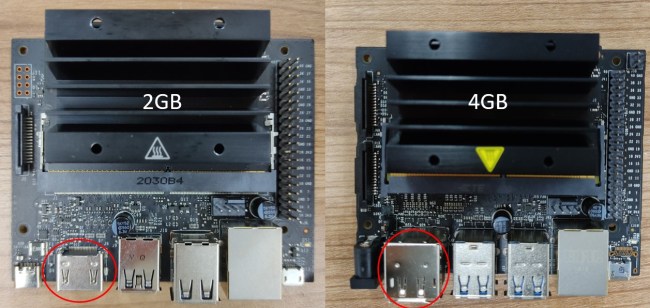

B01: 2GB vs 4GB

Table of comparisons between Nvidia Jetson Nano Developer Kit 2GB and 4GB(B01).

Hardware Changes from 4GB(B01) to 2GB

- Removed camera slot (J49)

- Changed DC barrel jack to USB Type-C port

- Changed the USB Type-A ports

- Removed Display Port

- Removed PoE – Power over Ethernet port (J38)

- Removed M.2 Key E slot (J18)

Compatibility Issues 2GB vs 4GB

- You cannot use the JetPack 4.4 image on the Jetson Nano 2GB. You can only use the provided Ubuntu 18.04 image, with the LXDE desktop environment and the Openbox window manager, linked from NVIDIA’s Getting Started with Jetson Nano 2GB Developer Kit website.

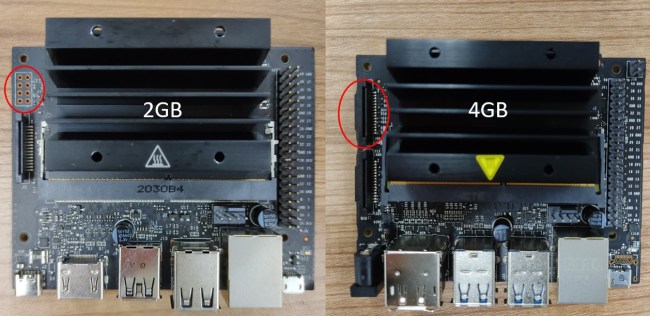

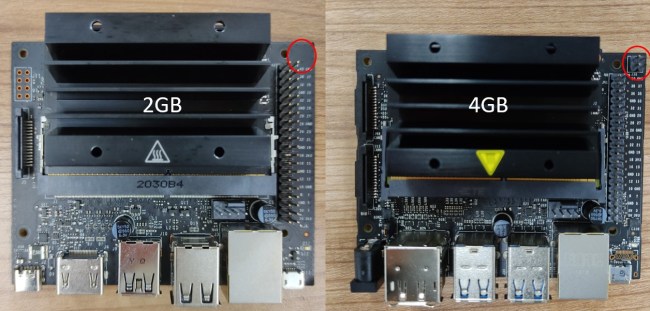

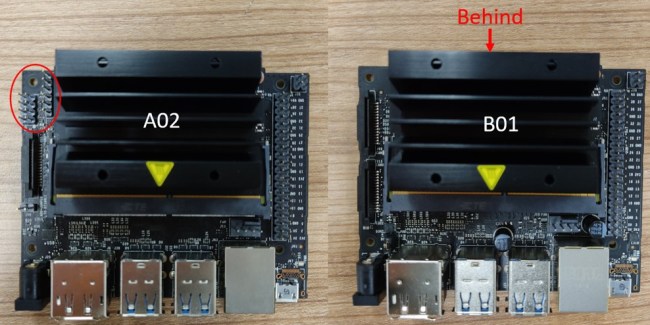

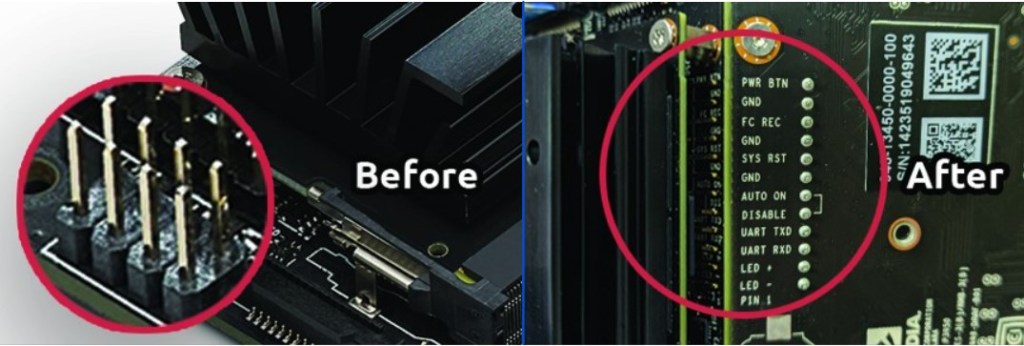

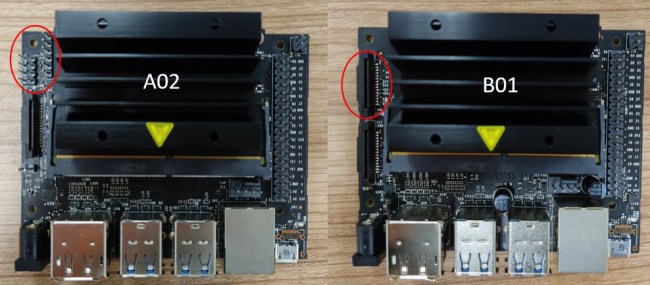

A02 vs B01

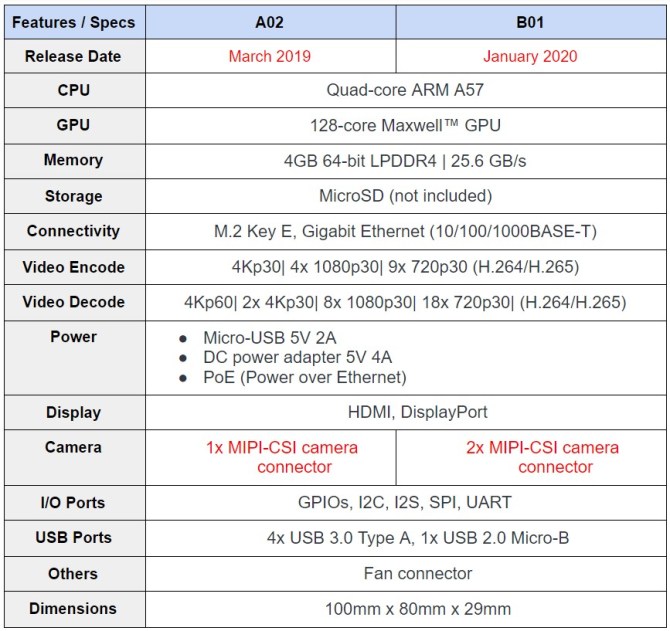

Table of comparisons between Nvidia Jetson Nano Developer Kit A02 and B01.

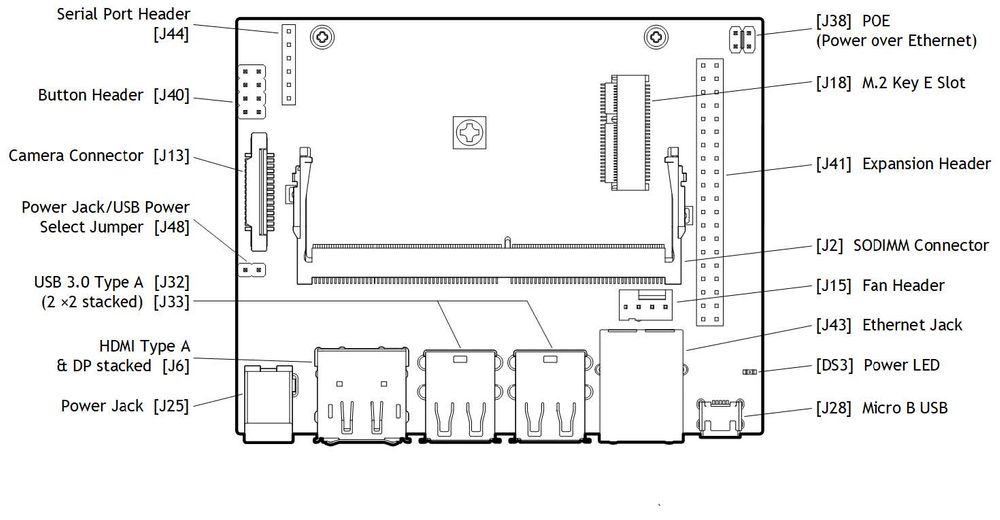

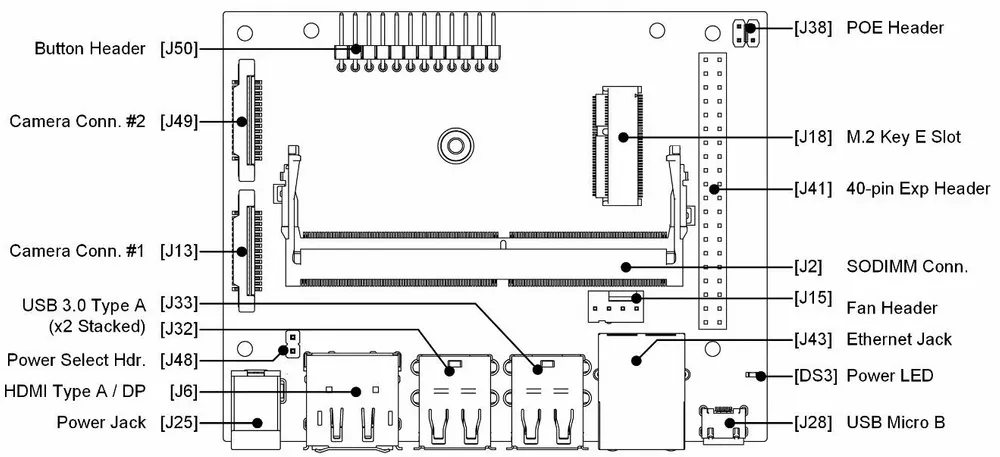

Figure 2: Jetson Nano A02 Pinout (left), Jetson Nano B01 Pinout (right)

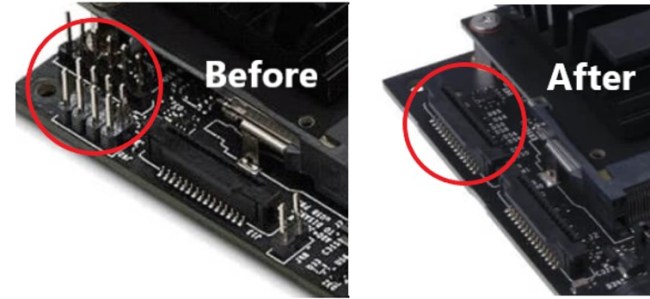

Hardware Changes from A02 to B01

- Removed Serial Port Header (J44)

- Changed the button header (J40) under the module

- Changed the position of Power Select Header (J48)

- Added a camera slot (J13)

Compatibility Issues A02 vs B01

- The hardware of Jetson Nano Developer Kit A02 cannot boot with Intel 8260 WiFi plugged in. This issue is fixed in the newer B01 version of the hardware. Refer to release notes.

- B0x images will work on A02 boards without issue. There are some pinmux differences between the two hardware revisions, but the B0x pinmux settings will work fine on an A02 board. However, A0x pinmux (dtb) does not work on B0x board.

The CoM modules and carrier boards of the older (A02) and newer (B01) models are not interchangeable. That means you cannot plug the A02 CoM module into the B01 carrier board, or vice versa. To upgrade, you need to replace the whole developer kit.

Other Equipment

Besides the Jetson Nano Developer Kit, you’ll also need a microSD card, a power supply (5V 2A), and an Ethernet cable or WiFi adapter.

microSD card

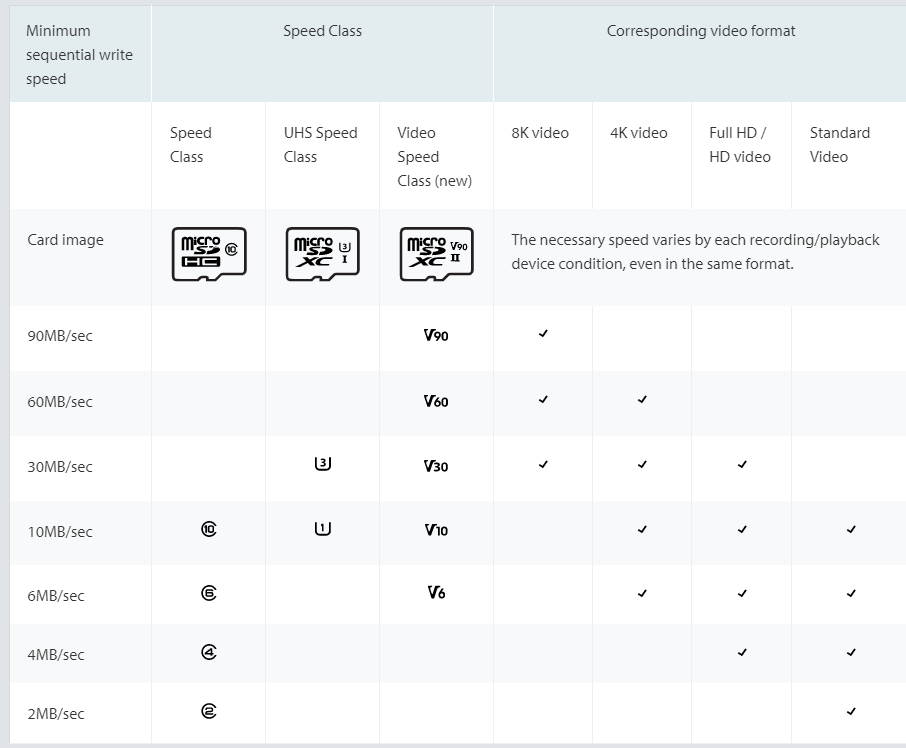

The Jetson Nano uses a microSD card as a boot device and primary storage. The minimum size for the microSD card is 16GB, but I would strongly recommend getting at least 32GB or 64GB. It’s also essential to get a fast microSD card, as this makes working on the Jetson Nano a lot smoother.

There are many different standards for microSD cards. If you want to read more, check out these two articles: A Guide to Speed Classes for SD and microSD Cards, and Fastest SD Card Speed Tests. For this project, you can get this one: SanDisk 64GB Extreme microSDXC UHS-I Memory Card with Adapter - Up to 160MB/s, C10, U3, V30, 4K, A2, Micro SD.

Power Supply

The Jetson Nano can be powered in three different ways: over USB Micro-B, Barrel Jack connector, or through the GPIO Header.

To power the Jetson Nano over USB Micro-B, the power supply needs to supply 5V 2A. Not every power supply is capable of providing this. NVIDIA specifically recommends a 5V 2.5A power supply from Adafruit, but I use a Raspberry Pi power supply, and it works just fine.

If you want to get the full performance out of the Jetson Nano, I’d recommend using the Barrel Jack instead of powering over USB because you can supply 5V 4A over the Barrel Jack.

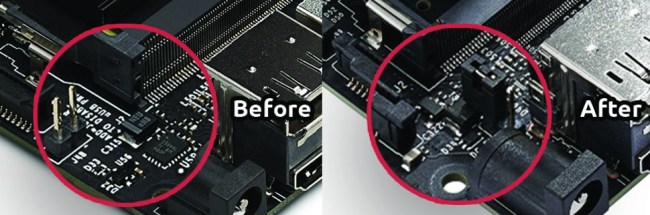

Before connecting the Barrel Jack, you need to place a jumper on J48. The power jumper location can vary depending on if you have the older A02 model or the newer B01 model.
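To make the difference between the two supply options concrete, here is a small arithmetic sketch. It only multiplies the voltage and current ratings quoted above; the dictionary labels are my own, and the numbers say nothing about how much power the board actually draws.

```python
# Power available from each supply option mentioned above (P = V * I).
# The labels are illustrative; the ratings are the ones quoted in the text.
SUPPLIES = {
    "USB Micro-B (5V 2A)": (5.0, 2.0),
    "Barrel Jack (5V 4A)": (5.0, 4.0),
}


def watts(volts: float, amps: float) -> float:
    """Return electrical power in watts for a given voltage and current."""
    return volts * amps


for name, (volts, amps) in SUPPLIES.items():
    print(f"{name}: up to {watts(volts, amps):.0f} W")
```

In other words, the Barrel Jack option offers twice the power budget of the USB Micro-B option, which is why it is the safer choice once a camera, fan, and motors are attached.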

Camera:

Here is a list of cameras that work with the Jetson Nano (list), but I suggest one of these two: 1) Raspberry Pi Camera Module v2, or 2) IMX219-160 8-Megapixels Camera Module 3280 × 2464 Resolution. If you also need to work in low light, get this one: IMX219-77IR 8-Megapixels Infrared Night Vision IR Camera Module 3280 × 2464 Resolution with IMX219 Sensor.

Note: If you don’t get the camera right, you might run into trouble when running the deep learning code. Check this article first: Camera Streaming and Multimedia.

Fan

If you don’t already have a fan, get this one: Noctua NF-A4x10 5V, Premium Quiet Fan, 3-Pin, 5V Version.

Ethernet cable or WiFi Adapter

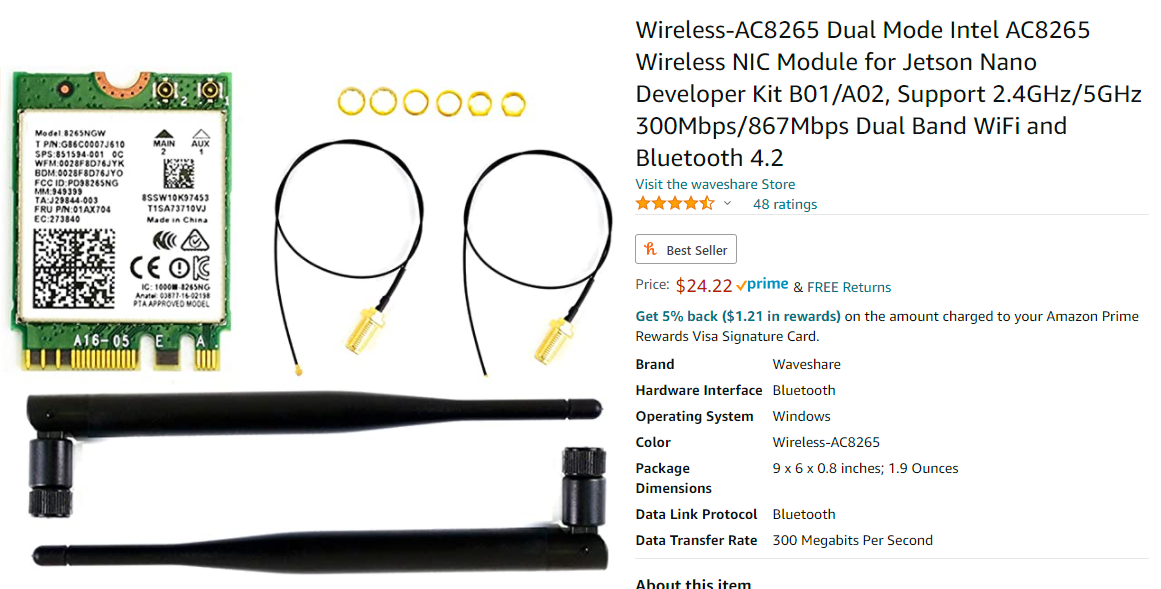

Lastly, you’ll need an ethernet cable or a WiFi Adapter since the Jetson Nano doesn’t come with one. For the WiFi Adapter, you can either use one that connects through USB, or you can use a PCIe WiFi Card like the Intel® Dual Band Wireless-AC 8265.

I use this one: Wireless-AC8265 Dual Mode Intel AC8265 Wireless NIC Module for Jetson Nano Developer Kit B01/A02, Support 2.4GHz/5GHz 300Mbps/867Mbps Dual Band WiFi and Bluetooth 4.2.

Reference:

- Jetson Nano B01 vs A02: What’s New for the Compute on Module (CoM) and Carrier Board, https://www.arducam.com/nvidia-jetson-nano-b01-update-dual-camera/

- Nvidia Jetson Nano Developer Kit A02 vs B01 vs 2GB, https://tutorial.cytron.io/2020/10/14/nvidia-jetson-nano-developer-kit-a02-vs-b01-vs-2gb/

Setup

Note: you need to download two pieces of software for this step: balenaEtcher, for flashing the OS image, and SD Card Formatter, for formatting the SD memory card. Also, instead of the SD card image provided by NVIDIA that is described below, use the Yahboom image with the deep learning modules integrated (Yahboom_jetbot_64GB or 32GB).

==> The rest of this section may be outdated.

Before we can get started setting up a Python environment and running some deep learning demos, we have to download the Jetson Nano Developer Kit SD Card Image and flash it to the microSD card.

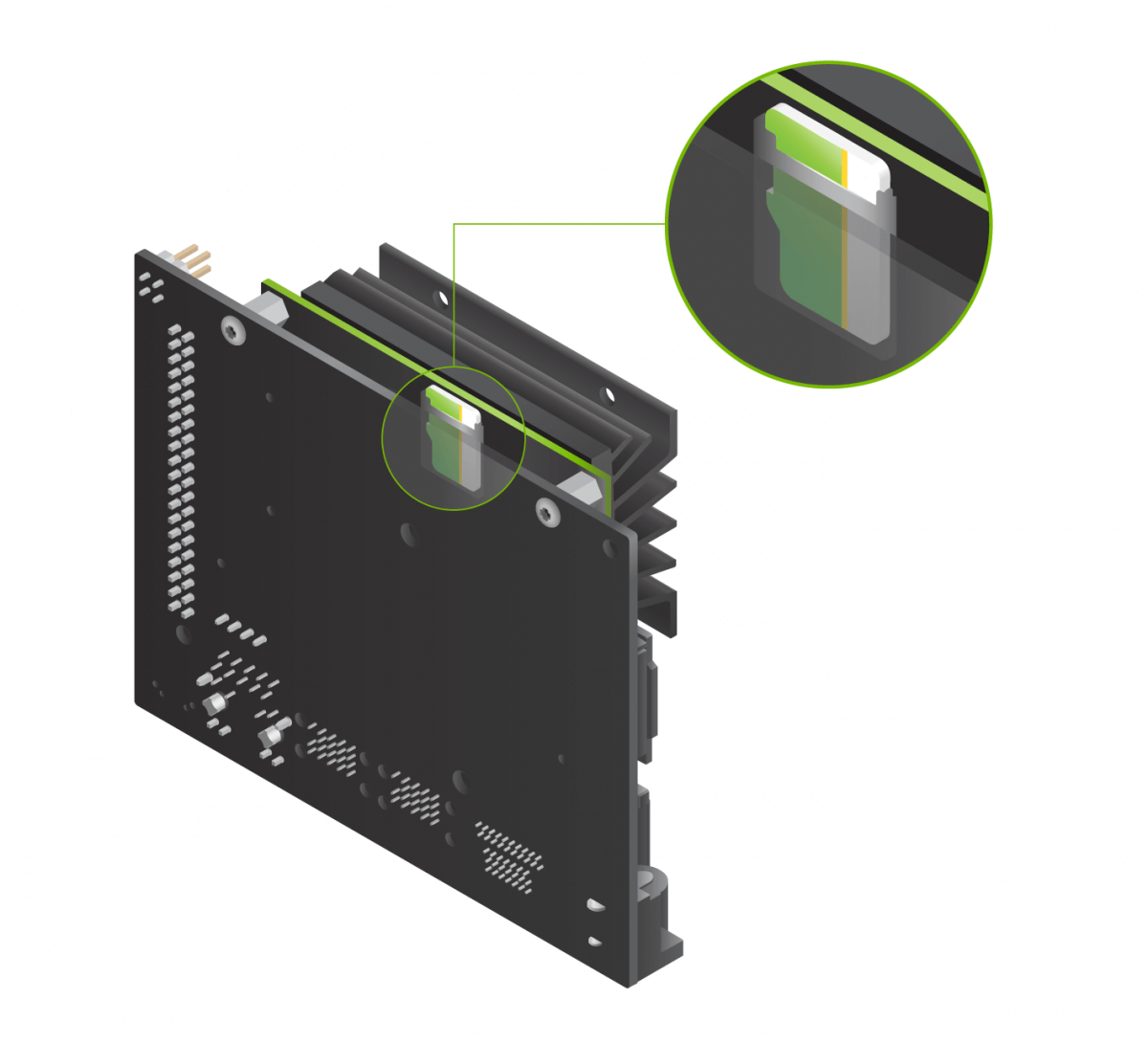

After that is done you need to insert the microSD card into the microSD slot as shown in the following image:

Figure 3: Insert microSD card (Source)

After inserting the microSD card, you can connect the power supply, which will automatically boot up the system.

When you boot the system for the first time, you’ll be taken through some initial setup, including:

- Review and accept NVIDIA Jetson software EULA

- Select system language, keyboard layout, and time zone

- Create username, password, and computer name

- Log in

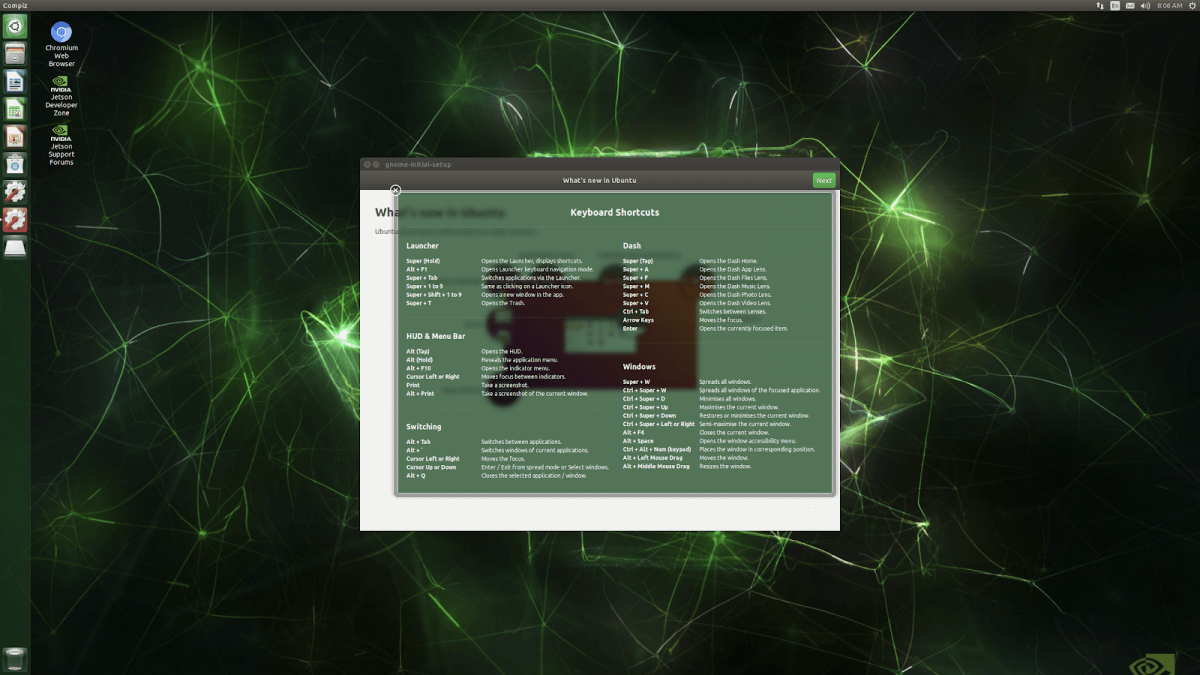

After the initial setup, you should see the following screen:

Figure 4: Desktop

Increasing swap memory (optional)

Recent releases of JetPack enable swap memory as part of the default distribution using the zram module. By default, 2GB of swap memory is enabled. To change the amount of swap memory, you can either edit the /etc/systemd/nvzramconfig.sh file directly, or you can use the resizeSwapMemory repository from JetsonHacksNano.

git clone https://github.com/JetsonHacksNano/resizeSwapMemory

cd resizeSwapMemory

./setSwapMemorySize.sh -g 4

After executing the above command, you’ll have to restart the Jetson Nano for the changes to take effect.
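After the reboot, it is worth confirming that the new swap size took effect. The sketch below is one way to do that from Python, assuming a Linux system that exposes /proc/meminfo; the function names are my own and not part of the resizeSwapMemory repository.

```python
import os


def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field name: integer value}."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Field: value" line
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def swap_total_gib(text: str) -> float:
    """Return the SwapTotal field (reported in kB) converted to GiB."""
    return parse_meminfo(text).get("SwapTotal", 0) / (1024 * 1024)


if os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        print(f"Swap currently configured: {swap_total_gib(f.read()):.2f} GiB")
```

If the printed value does not match what you passed to setSwapMemorySize.sh, the reboot probably has not happened yet.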

Installing prerequisites and configuring your Python environment

Now that the Jetson Nano is ready to go, we will create a deep learning environment. We will start by installing all prerequisites and configuring a Python environment, and then look at how to code remotely using VSCode Remote SSH.

Installing prerequisites

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install git cmake python3-dev nano

sudo apt-get install libhdf5-serial-dev hdf5-tools libhdf5-dev zlib1g-dev zip libjpeg8-dev

Configuring your Python environment

Next we will configure our Python environment. There are two ways to do this: 1) pip + virtualenv; 2) Conda (or Miniconda).

- pip: a package manager

- virtualenv: an environment manager ==> Choose virtualenv only when you have sudo access to the machine you are working on, since the setup below installs it with sudo. For a regular (i.e., non-sudo/root) user on a Linux/Mac machine, Conda is much easier to set up.

- conda: an environment manager and package installer ==> Choose Anaconda if you have the time and disk space (a few minutes and 3 GB), and/or don’t want to install each of the packages you want to use individually.

- conda vs miniconda: Both Anaconda and Miniconda use Conda as the package manager. The difference is that Miniconda ships only the package management system, without the bundle of pre-installed packages that Anaconda includes. Once Conda is installed, you can install whatever packages you need from scratch, along with any desired version of Python. ==> Choose Miniconda if you don’t have the time or disk space (about 3 GB) to install 720+ packages, many of which you may never use and could easily install when needed.

If you want to learn more about their differences, check this article: Anaconda vs. Miniconda vs. Virtualenv

Method 1: pip3 and virtualenv

Install pip:

sudo apt-get install python3-pip

sudo pip3 install -U pip testresources setuptools

For managing virtual environments we’ll be using virtualenv, which can be installed like below:

sudo pip3 install virtualenv virtualenvwrapper

To get virtualenv to work we need to add the following lines to the ~/.bashrc file:

# virtualenv and virtualenvwrapper

export WORKON_HOME=$HOME/.virtualenvs

export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3

source /usr/local/bin/virtualenvwrapper.sh

To activate the changes the following command must be executed:

source ~/.bashrc

Now we can create a virtual environment using the mkvirtualenv command.

mkvirtualenv latest_tf -p python3

workon latest_tf
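To confirm that workon really switched interpreters, you can ask Python itself. This is a minimal sketch using only the standard library; the function name is my own, and the check relies on the usual convention that a virtual environment changes sys.prefix.

```python
import sys


def in_virtual_environment() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; venv and newer virtualenv
    # releases instead make sys.prefix differ from sys.base_prefix.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    return hasattr(sys, "real_prefix") or sys.prefix != base_prefix


print("interpreter:", sys.executable)
print("inside a virtual environment:", in_virtual_environment())
```

Run it once before and once after workon latest_tf; the interpreter path should move from /usr/bin to somewhere under ~/.virtualenvs.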

Method 2: Miniconda (not completed)

Step 1: Decide which Miniconda version to download.

Go to this website, and find the Latest Miniconda Installer Links.

Before that, we need to figure out the system architecture of our machine. On Linux, you can run:

jetbot@jetbot-desktop:~$ lscpu

Architecture: aarch64

Byte Order: Little Endian

CPU(s): 4

On-line CPU(s) list: 0-3

Thread(s) per core: 1

Core(s) per socket: 4

Socket(s): 1

Vendor ID: ARM

Model: 1

Model name: Cortex-A57

Stepping: r1p1

CPU max MHz: 1479.0000

CPU min MHz: 102.0000

BogoMIPS: 38.40

L1d cache: 32K

L1i cache: 48K

L2 cache: 2048K

Flags: fp asimd evtstrm aes pmull sha1 sha2 crc32

==> So, we need to download the latest miniconda with aarch64 architecture:

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-aarch64.sh

sh Miniconda3-latest-Linux-aarch64.sh -b -p $PWD/miniconda3

# -b: run the installer in batch mode (no prompts; assumes you accept the license terms)

# -p: installation prefix (the directory to install into)

The above command creates a “miniconda3” directory. From now on, we will refer to the path that contains the “miniconda3” directory as “/PATH/TO”.
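Because the installer file name has to match the CPU architecture, it is easy to download one file and run another. The following sketch derives the name from the architecture string instead of typing it by hand. The helper names are my own, and only the two architectures discussed here are handled; treat it as an illustration rather than an official tool.

```python
import platform

BASE_URL = "https://repo.anaconda.com/miniconda"
# Only the architectures mentioned in this article; anything else is rejected.
SUPPORTED = ("aarch64", "x86_64")


def miniconda_installer(machine: str) -> str:
    """Return the installer file name for a Linux machine of the given architecture."""
    if machine not in SUPPORTED:
        raise ValueError(f"unsupported architecture: {machine!r}")
    return f"Miniconda3-latest-Linux-{machine}.sh"


def miniconda_url(machine: str) -> str:
    """Return the download URL of the installer for the given architecture."""
    return f"{BASE_URL}/{miniconda_installer(machine)}"


detected = platform.machine()  # reports "aarch64" on the Jetson Nano
print("detected architecture:", detected)
if detected in SUPPORTED:
    print("installer URL:", miniconda_url(detected))
```

On the Jetson Nano this prints the aarch64 URL used in the wget command above.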

Activate Conda

-bash-4.2$ source /PATH/TO/miniconda3/bin/activate

-bash-4.2$ export PYTHONNOUSERSITE=true

Create new environment and activate it

-bash-4.2$ conda create -n tf_latest python=3.6.9

-bash-4.2$ conda activate tf_latest

# You can list all discoverable environments with `conda info --envs`.

Verify pip points to correct library.

-bash-4.2$ which pip

Expected Outcome: /PATH/TO/miniconda3/envs/tf_latest/bin/pip

Install latest TensorFlow

-bash-4.2$ pip install tensorflow-gpu==2.3.0

Reference:

- https://deeplearning.lipingyang.org/2018/12/23/anaconda-vs-miniconda-vs-virtualenv/

- Miniconda

- 10 Useful Commands to Collect System and Hardware Information in Linux

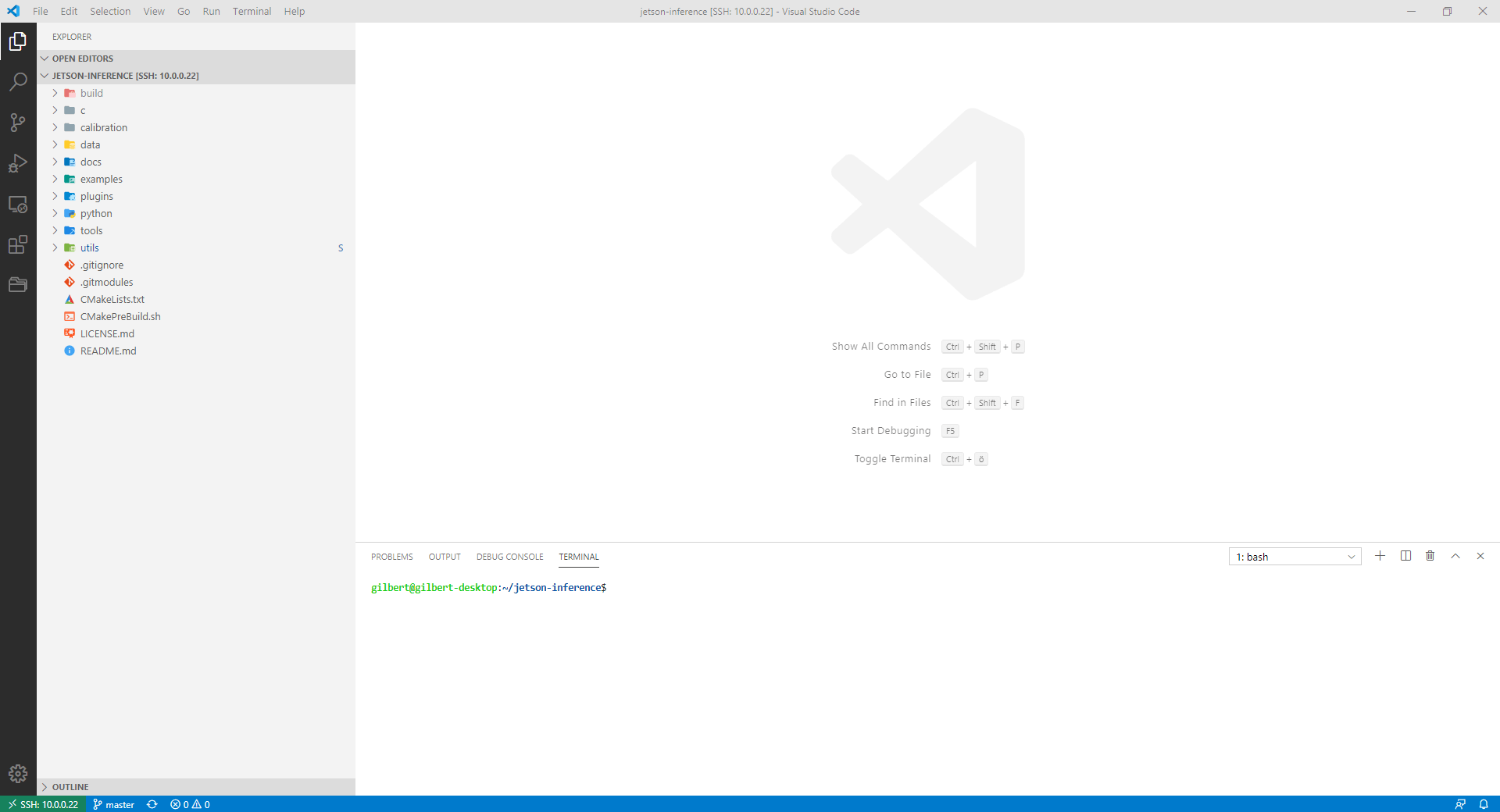

Coding remote with Visual Studio Code (optional)

If you are like me and hate writing long scripts in nano or vim, the VSCode remote development plugin is for you. It allows you to develop remotely inside VSCode by establishing an SSH remote connection.

To use VSCode remote development, you’ll first have to install the remote development plugin. After that, you need to create an SSH-Key on your local machine and then copy it over to the Jetson Nano.

Note: Perform this on your local machine!!

# Create Key

ssh-keygen -t rsa

# Copy key to jetson nano

cat ~/.ssh/id_rsa.pub | ssh user@hostname 'cat >> .ssh/authorized_keys'

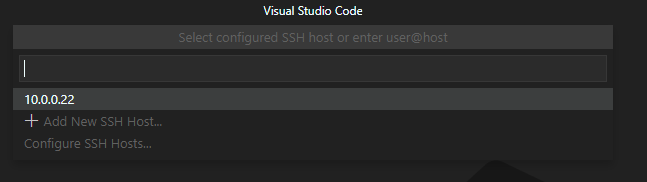

Now you only need to add the SSH Host. Ctrl + Shift + P -> Remote SSH: Connect to Host.

Figure 5: Added new host.
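Remote SSH reads its hosts from your OpenSSH config file (typically ~/.ssh/config on the local machine), so adding a host amounts to adding one entry there. The sketch below only builds the text of such an entry; the alias, IP address, and user name are hypothetical placeholders that you should replace with your own values.

```python
def ssh_config_entry(alias: str, hostname: str, user: str,
                     identity_file: str = "~/.ssh/id_rsa") -> str:
    """Build an OpenSSH client config entry for one host."""
    lines = [
        f"Host {alias}",
        f"    HostName {hostname}",
        f"    User {user}",
        f"    IdentityFile {identity_file}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values for illustration only.
print(ssh_config_entry("jetbot", "192.168.1.50", "jetbot"))
```

Once an entry like this is in your config file, the alias shows up in the Remote SSH host list, and ssh jetbot works from a terminal as well.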

Installing deep learning libraries

Now that we have our development and python environments set up, we can start installing some deep learning libraries. NVIDIA provides a guide on how to install deep learning libraries on the Jetson Nano. I simply put the commands for some installations below.

TensorFlow

Note: TensorFlow 2.0 can be problematic. Check out this thread, https://forums.developer.nvidia.com/t/tensorflow-2-0/72530, and search for “TensorFlow 2.0 can be installed with JetPack4.3 now.” You may also need to know your JetPack version; the jtop command (from the jetson-stats package) can show it.
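If jtop is not installed, another way to identify your release is to read /etc/nv_tegra_release, which records the L4T (Linux for Tegra) version that each JetPack release is built on. The sketch below parses that line. The function name is my own, and the small L4T-to-JetPack table is an assumption based on NVIDIA’s release naming, so double-check it against the release notes for your image.

```python
import os
import re

# Assumed mapping from L4T version to JetPack version; extend as needed.
L4T_TO_JETPACK = {"32.3.1": "4.3", "32.4.3": "4.4", "32.5.1": "4.5.1"}


def parse_l4t_version(release_line: str):
    """Extract an L4T version such as '32.4.3' from the first line of nv_tegra_release."""
    match = re.search(r"R(\d+) \(release\), REVISION: (\d+(?:\.\d+)*)", release_line)
    if match is None:
        return None
    return f"{match.group(1)}.{match.group(2)}"


if os.path.exists("/etc/nv_tegra_release"):
    with open("/etc/nv_tegra_release") as f:
        l4t = parse_l4t_version(f.readline())
    print("L4T:", l4t, "JetPack:", L4T_TO_JETPACK.get(l4t, "unknown"))
```

The JetPack version matters because the TensorFlow wheels below are published per JetPack release (the v43 and v44 parts of the URLs).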

# install prerequisites

$ sudo apt-get install libhdf5-serial-dev hdf5-tools libhdf5-dev zlib1g-dev zip libjpeg8-dev liblapack-dev libblas-dev gfortran

# Use a virtual environment instead of root privileges (sudo)

workon latest_tf

# install and upgrade pip3

$ sudo apt-get install python3-pip

$ pip3 install -U pip testresources setuptools==49.6.0

# install the following python packages

$ pip3 install -U numpy==1.16.1 future==0.18.2 mock==3.0.5 h5py==2.10.0 keras_preprocessing==1.1.1 keras_applications==1.0.8 gast==0.2.2 futures protobuf pybind11

$ pip3 install -U numpy grpcio absl-py py-cpuinfo psutil portpicker six mock requests gast h5py astor termcolor protobuf keras-applications keras-preprocessing wrapt google-pasta setuptools testresources

# Note: use this if you get an error with h5py (see https://stackoverflow.com/questions/64942239/unable-to-install-h5py-on-windows-using-pip)

$ pip install versioned-hdf5

# to install TensorFlow 1.15 for JetPack 4.4:

$ sudo pip3 install --pre --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v44 'tensorflow<2'

# or install the latest version of TensorFlow (2.3) for JetPack 4.4:

$ sudo pip3 install --pre --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v44 tensorflow

# alternatively, for JetPack 4.3: pin pip, then install TensorFlow 1.15

sudo pip3 install -U pip==20.2.4

sudo pip3 install --pre --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v43 tensorflow-gpu==1.15.0+nv19.12

Keras

# beforehand, install TensorFlow (https://eLinux.org/Jetson_Zoo#TensorFlow)

$ sudo apt-get install -y build-essential libatlas-base-dev gfortran

$ sudo pip3 install keras

PyTorch

Note: Instead of running the following steps, I suggest following this instruction, because they provide a script that downloads PyTorch and sets up the correct environment for you!

# install OpenBLAS and OpenMPI

$ sudo apt-get install libopenblas-base libopenmpi-dev

# Python 2.7

$ wget https://nvidia.box.com/shared/static/yhlmaie35hu8jv2xzvtxsh0rrpcu97yj.whl -O torch-1.4.0-cp27-cp27mu-linux_aarch64.whl

$ pip install future torch-1.4.0-cp27-cp27mu-linux_aarch64.whl

# Python 3.6

$ sudo apt-get install python3-pip

$ pip3 install Cython

$ wget https://nvidia.box.com/shared/static/9eptse6jyly1ggt9axbja2yrmj6pbarc.whl -O torch-1.6.0-cp36-cp36m-linux_aarch64.whl

$ pip3 install numpy torch-1.6.0-cp36-cp36m-linux_aarch64.whl

Torchvision

Select the version of torchvision to download depending on the version of PyTorch that you have installed:

- PyTorch v1.0 - torchvision v0.2.2

- PyTorch v1.1 - torchvision v0.3.0

- PyTorch v1.2 - torchvision v0.4.0

- PyTorch v1.3 - torchvision v0.4.2

- PyTorch v1.4 - torchvision v0.5.0

- PyTorch v1.5 - torchvision v0.6.0

- PyTorch v1.5.1 - torchvision v0.6.1

- PyTorch v1.6 - torchvision v0.7.0

- PyTorch v1.7 - torchvision v0.8.0

$ sudo apt-get install libjpeg-dev zlib1g-dev

$ git clone --branch <version> https://github.com/pytorch/vision torchvision # see the list above for the version of torchvision to download

$ cd torchvision

$ sudo python3 setup.py install

$ cd ../ # attempting to load torchvision from build dir will result in import error

$ sudo pip install 'pillow<7' # always needed for Python 2.7, not needed for torchvision v0.5.0+ with Python 3.6
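Picking the matching torchvision branch by eye is error-prone, so here is the version list above expressed as a lookup. The table simply restates the pairs from this article; the function name is my own, and versions outside the list raise an error instead of guessing.

```python
# PyTorch version -> torchvision branch/tag, restating the list above.
TORCHVISION_FOR_TORCH = {
    "1.0": "v0.2.2",
    "1.1": "v0.3.0",
    "1.2": "v0.4.0",
    "1.3": "v0.4.2",
    "1.4": "v0.5.0",
    "1.5": "v0.6.0",
    "1.5.1": "v0.6.1",
    "1.6": "v0.7.0",
    "1.7": "v0.8.0",
}


def torchvision_tag(torch_version: str) -> str:
    """Return the torchvision tag listed for a PyTorch version such as '1.6.0'."""
    parts = torch_version.split("+")[0].split(".")  # drop any local build suffix
    # Try the exact three-part version first (e.g. 1.5.1), then major.minor.
    for key in (".".join(parts[:3]), ".".join(parts[:2])):
        if key in TORCHVISION_FOR_TORCH:
            return TORCHVISION_FOR_TORCH[key]
    raise KeyError(f"no torchvision version listed for PyTorch {torch_version}")


print(torchvision_tag("1.6.0"))
```

The returned tag is what goes in place of <version> in the git clone --branch command.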

OpenCV

Installing OpenCV on the Jetson Nano can be a bit more complicated, but frankly, JetsonHacks.com has a great guide.

Compiling and installing Jetson Inference

NVIDIA’s Jetson Inference repository includes lots of great scripts that allow you to perform image classification, object detection, and semantic segmentation on both images and a live video stream. In this article, we will go through how to compile and install the Jetson Inference repository and how to run some of the provided demos. Maybe I will go through the repository in more detail in an upcoming article.

To install Jetson Inference, you need to run the following commands (or follow this instruction: Building the Project from Source):

# download the repo

$ git clone --recursive https://github.com/dusty-nv/jetson-inference

$ cd jetson-inference

# configure build tree

$ mkdir build

$ cd build

$ cmake ../

# build and install

$ make -j$(nproc)

$ sudo make install

$ sudo ldconfig

Running the Jetson Inference demos

After building the project, you can go to the jetson-inference/build/aarch64/bin directory. Inside you’ll find multiple C++ and Python scripts. Below we’ll go through how to run image classification, object detection, and semantic segmentation.

Image Classification

Inside the folder there are two imagenet examples: one for an image and one for a camera. Both are available in C++ and Python.

- imagenet-console.cpp (C++)

- imagenet-console.py (Python)

- imagenet-camera.cpp (C++)

- imagenet-camera.py (Python)

# C++

$ ./imagenet-console --network=resnet-18 images/jellyfish.jpg output_jellyfish.jpg

# Python

$ ./imagenet-console.py --network=resnet-18 images/jellyfish.jpg output_jellyfish.jpg

Figure 7: Image Classification Example
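The same classification can also be done from your own Python code through the bindings that sudo make install puts on the system. The sketch below follows the pattern of the Hello AI World examples as I understand them (imageNet, loadImage, Classify, GetClassDesc); the wrapper function is my own, and the exact API may differ between jetson-inference releases, so treat it as a starting point.

```python
def classify_image(path: str, network: str = "resnet-18"):
    """Classify one image and return (class description, confidence)."""
    # Imported lazily so this file can still be loaded on a machine
    # where jetson-inference is not installed.
    import jetson.inference
    import jetson.utils

    net = jetson.inference.imageNet(network)   # loads the model (slow on first run)
    img = jetson.utils.loadImage(path)         # loads the image into GPU memory
    class_id, confidence = net.Classify(img)
    return net.GetClassDesc(class_id), confidence


# Example call, to be run on the Jetson from the build/aarch64/bin directory:
#     label, confidence = classify_image("images/jellyfish.jpg")
```

The first call for a given network takes a while, because the model is optimized for the device before it can run.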

Object Detection

- detectnet-console.cpp (C++)

- detectnet-console.py (Python)

- detectnet-camera.cpp (C++)

- detectnet-camera.py (Python)

# C++

$ ./detectnet-console --network=ssd-mobilenet-v2 images/peds_0.jpg output.jpg # --network flag is optional

# Python

$ ./detectnet-console.py --network=ssd-mobilenet-v2 images/peds_0.jpg output.jpg # --network flag is optional

Figure 8: Object Detection Example

Semantic Segmentation

- segnet-console.cpp (C++)

- segnet-console.py (Python)

- segnet-camera.cpp (C++)

- segnet-camera.py (Python)

# C++

$ ./segnet-console --network=fcn-resnet18-cityscapes images/city_0.jpg output.jpg

# Python

$ ./segnet-console.py --network=fcn-resnet18-cityscapes images/city_0.jpg output.jpg

Figure 9: Semantic Segmentation

JetPack 4.5.1 CUDA and VisionWorks samples

JetPack 4.5.1 includes multiple CUDA and VisionWorks demos.

CUDA samples

Installation:

/usr/local/cuda/bin/cuda-install-samples-10.2.sh ~

cd ~/NVIDIA_CUDA-10.2_Samples/

make

cd bin/aarch64/linux/release

After compiling, you can find multiple examples inside the bin/aarch64/linux/release directory.

oceanFFT sample:

./oceanFFT

Figure 10: oceanFFT sample

particles sample:

./particles

Figure 11: particles sample

smokeParticles sample:

./smokeParticles

Figure 12: smoke particles sample

VisionWorks samples

Installation:

/usr/share/visionworks/sources/install-samples.sh ~

cd ~/VisionWorks-1.6-Samples

make

cd bin/aarch64/linux/release

After compiling, you can find multiple examples inside the bin/aarch64/linux/release directory.

Feature Tracker sample:

./nvx_demo_feature_tracker

Figure 13: Feature tracker sample

Motion Estimation sample:

./nvx_demo_motion_estimation

Figure 14: Motion Estimation sample

Object Tracker sample:

./nvx_sample_object_tracker_nvxcu

Figure 15: Object Tracker sample

Conclusion

That’s it from this article. In follow-up articles, I will go further into developing Artificial Intelligence on the Jetson Nano, including:

- Deploying custom models on the Jetson Nano

- NVIDIA Jetbot

If you have any questions or just want to chat with me, feel free to leave a comment below or contact me on social media. If you want to get continuous updates about my blog, make sure to join my newsletter.

Reference:

- [Blog] Getting a Running Start with the NVIDIA Jetson Nano, https://www.farsightsecurity.com/blog/txt-record/nvidia-20200124/ ==> Software Intro

- [Blog] Getting Started with the NVIDIA Jetson Nano Developer Kit, https://dronebotworkshop.com/nvidia-jetson-developer-kit/

- [NVIDIA Site] Getting Started with Jetson Nano Developer Kit, https://developer.nvidia.com/embedded/learn/get-started-jetson-nano-devkit

- [Github] Hello AI World, jetson-inference, https://github.com/dusty-nv/jetson-inference

- [Blog] An in-depth look at using the NVIDIA Jetson Inference machine learning project: the computer vision image classification task (in Chinese), https://www.rs-online.com/designspark/nvidia-jetson-inference-1-cn

- [Blog] Getting Started With NVIDIA Jetson Nano Developer Kit, https://gilberttanner.com/blog/jetson-nano-getting-started#overview

- [JetBot] Getting Started, https://jetbot.org/master/getting_started.html

- Yahboom Documentation, http://www.yahboom.net/study/JETBOT

- Embedded Linux Wiki, Jetson/Performance, https://elinux.org/Jetson/Performance ==> (For how to control CPU performance)

- Jetson Nano Wiki – A wiki for the NVIDIA Jetson Nano, https://elinux.org/Jetson_Nano

- How to Establish Remote Desktop Access to Ubuntu From Windows, https://www.makeuseof.com/tag/how-to-establish-simple-remote-desktop-access-between-ubuntu-and-windows/

- How To Set Up a Firewall with UFW on Ubuntu 18.04, https://www.digitalocean.com/community/tutorials/how-to-set-up-a-firewall-with-ufw-on-ubuntu-18-04

- What are the risks of running ‘sudo pip’?, https://stackoverflow.com/questions/21055859/what-are-the-risks-of-running-sudo-pip

Relevant Software:

- VNC viewer, https://www.realvnc.com/en/connect/download/viewer/ ==> This lets you remotely control the AI JetBot from Windows, with GUI support as well

Appendix A: quick reference for Jetson Inference demo

More information about Camera Streaming and Multimedia is available here: https://github.com/dusty-nv/jetson-inference/blob/master/docs/aux-streaming.md

For this project, use the command video-viewer csi://0 to view the live camera.

# ===========================> MIPI CSI cameras Testing

$ video-viewer csi://0 # MIPI CSI camera 0 (substitute other camera numbers)

$ video-viewer csi://0 output.mp4 # save output stream to MP4 file (H.264 by default)

$ video-viewer csi://0 rtp://<remote-ip>:1234 # broadcast output stream over RTP to <remote-ip>

# By default, CSI cameras will be created with a 1280x720 resolution. To specify a different resolution, use the --input-width and --input-height options. Note that the specified resolution must match one of the formats supported by the camera.

$ video-viewer --input-width=1920 --input-height=1080 csi://0

# ===========================> V4L2 cameras Testing (e.g., an extra external camera)

# However, if you do have a Logitech camera, e.g., HD Pro Webcam C920, then you can try the following:

# check which devices are available:

ls /dev/video*

# Now the video devices should be listed. If there are any, you'll need to find the drivers if they exist.

$ lsusb

Bus 002 Device 002: ID 0bda:0411 Realtek Semiconductor Corp.

Bus 002 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 001 Device 003: ID 8087:0a2b Intel Corp.

Bus 001 Device 006: ID 046d:c52b Logitech, Inc. Unifying Receiver

Bus 001 Device 005: ID e0ff:0002

Bus 001 Device 004: ID 046d:082d Logitech, Inc. HD Pro Webcam C920

Bus 001 Device 002: ID 0bda:5411 Realtek Semiconductor Corp.

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

# or use v4l-utils to check the existing devices:

$ v4l2-ctl --list-devices

vi-output, imx219 6-0010 (platform:54080000.vi:0):

/dev/video0

HD Pro Webcam C920 (usb-70090000.xusb-2.1):

/dev/video1
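If you want to pick the right /dev/video node from a script rather than by eye, the listing above is easy to parse. The sketch below assumes the layout shown here (a device name ending in a colon, followed by indented device nodes); the function name is my own, and the live call only runs if v4l2-ctl is installed.

```python
import shutil
import subprocess


def parse_v4l2_devices(output: str) -> dict:
    """Map each device name from `v4l2-ctl --list-devices` to its /dev nodes."""
    devices = {}
    current = None
    for raw_line in output.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if line.startswith("/dev/"):
            if current is not None:
                devices[current].append(line)
        elif line.endswith(":"):
            current = line[:-1]  # drop the trailing colon
            devices[current] = []
    return devices


if shutil.which("v4l2-ctl"):
    result = subprocess.run(["v4l2-ctl", "--list-devices"],
                            stdout=subprocess.PIPE, universal_newlines=True)
    for name, nodes in parse_v4l2_devices(result.stdout).items():
        print(name, "->", ", ".join(nodes))
```

With the output shown above, the CSI camera (imx219) maps to /dev/video0 and the Logitech webcam to /dev/video1, which is why the ffmpeg commands in this section use /dev/video1.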

$ sudo apt install ffmpeg

$ ffplay /dev/video1

# Take a picture from terminal

$ ffmpeg -f v4l2 -video_size 1280x720 -i /dev/video1 -frames 1 out.jpg

# Parameters chosen based on "How to get camera parameters like resolution" below:

$ ffmpeg -f v4l2 -framerate 30 -video_size 1280x720 -input_format mjpeg -i /dev/video1 -c copy out.mkv

# Then:

$ ffprobe out.mkv

# Read more here, https://askubuntu.com/questions/348838/how-to-check-available-webcams-from-the-command-line

# ===========================> Using the ImageNet Program on Jetson

cd ~/jetson-inference/build/aarch64/bin

./imagenet images/orange_0.jpg images/test/output_0.jpg # (default network is googlenet)

# Using another image

./imagenet images/strawberry_0.jpg images/test/output_1.jpg

# Using Different Classification Models

./imagenet --network=resnet-18 images/jellyfish.jpg images/test/output_jellyfish.jpg

# Processing a Video

# Download test video (thanks to jell.yfish.us)

wget https://nvidia.box.com/shared/static/tlswont1jnyu3ix2tbf7utaekpzcx4rc.mkv -O jellyfish.mkv

# C++

./imagenet --network=resnet-18 jellyfish.mkv images/test/jellyfish_resnet18.mkv

# Running the Live Camera Recognition Demo

# The imagenet.cpp / imagenet.py samples that we used previously can also be used for realtime camera streaming. The types of supported cameras include:

# MIPI CSI cameras (csi://0)

# V4L2 cameras (/dev/video0)

# RTP/RTSP streams (rtsp://username:password@ip:port)

# For more information about video streams and protocols, please see the Camera Streaming and Multimedia page, https://github.com/dusty-nv/jetson-inference/blob/master/docs/aux-streaming.md

./imagenet csi://0 # MIPI CSI camera

./imagenet /dev/video0 # V4L2 camera

./imagenet /dev/video0 output.mp4 # save to video file
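All of the demo programs take the same kinds of input strings, and the prefix decides how the input is treated. The sketch below only illustrates that convention using the forms that appear in this appendix. It is my own helper, not part of jetson-inference, and the real programs accept more schemes than the ones listed here.

```python
def stream_kind(uri: str) -> str:
    """Classify an input string the way it is used in the commands in this appendix."""
    if uri.startswith("csi://"):
        return "MIPI CSI camera"
    if uri.startswith("/dev/video"):
        return "V4L2 camera"
    if uri.startswith(("rtp://", "rtsp://")):
        return "RTP/RTSP stream"
    return "file"  # images, videos, directories, or wildcard sequences


for example in ("csi://0", "/dev/video0", "rtsp://username:password@ip:port", "jellyfish.mkv"):
    print(f"{example}: {stream_kind(example)}")
```

This is why the same imagenet, detectnet, and segnet commands work unchanged for images, video files, and live cameras: only the input string differs.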

# ===========================> Locating Objects with DetectNet: Detecting Objects from Images

./detectnet --network=ssd-mobilenet-v2 images/peds_0.jpg images/test/output.jpg # --network flag is optional

./detectnet images/peds_1.jpg images/test/output.jpg

# Python

./detectnet.py --network=ssd-mobilenet-v2 images/peds_0.jpg images/test/output.jpg # --network flag is optional

./detectnet.py images/peds_1.jpg images/test/output.jpg

# Processing a Directory or Sequence of Images: If you have multiple images that you'd like to process at one time, you can launch the detectnet program with the path to a directory that contains images or a wildcard sequence:

./detectnet "images/peds_*.jpg" images/test/peds_output_%i.jpg

# Processing Video Files: You can also process videos from disk. There are some test videos found on your Jetson under /usr/share/visionworks/sources/data

./detectnet /usr/share/visionworks/sources/data/pedestrians.mp4 images/test/pedestrians_ssd.mp4

./detectnet /usr/share/visionworks/sources/data/parking.avi images/test/parking_ssd.avi

# Running the Live Camera Detection Demo

./detectnet csi://0 # MIPI CSI camera

./detectnet /dev/video0 # V4L2 camera

./detectnet /dev/video0 output.mp4 # save to video file

# ======================= Semantic Segmentation with SegNet

./segnet --network=<model> input.jpg output.jpg # overlay segmentation on original

./segnet --network=<model> --alpha=200 input.jpg output.jpg # make the overlay less opaque

./segnet --network=<model> --visualize=mask input.jpg output.jpg # output the solid segmentation mask

# Cityscapes: Let's look at some different scenarios. Here's an example of segmenting an urban street scene with the Cityscapes model:

./segnet --network=fcn-resnet18-cityscapes images/city_0.jpg images/test/output.jpg

# DeepScene: The DeepScene dataset consists of off-road forest trails and vegetation, aiding in path-following for outdoor robots. Here's an example of generating the segmentation overlay and mask by specifying the --visualize argument:

./segnet --network=fcn-resnet18-deepscene images/trail_0.jpg images/test/output_overlay.jpg # overlay

./segnet --network=fcn-resnet18-deepscene --visualize=mask images/trail_0.jpg images/test/output_mask.jpg # mask

# Multi-Human Parsing (MHP): Multi-Human Parsing provides dense labeling of body parts, like arms, legs, head, and different types of clothing. See the handful of test images named humans_*.jpg found under images/ for trying out the MHP model:

./segnet --network=fcn-resnet18-mhp images/humans_0.jpg images/test/output.jpg

# Pascal VOC: Pascal VOC is one of the original datasets used for semantic segmentation, containing various people, animals, vehicles, and household objects. There are some sample images included named object_*.jpg for testing out the Pascal VOC model:

./segnet --network=fcn-resnet18-voc images/object_0.jpg images/test/output.jpg

# SUN RGB-D: The SUN RGB-D dataset provides segmentation ground-truth for many indoor objects and scenes commonly found in office spaces and homes. See the images named room_*.jpg found under the images/ subdirectory for testing out the SUN models:

./segnet --network=fcn-resnet18-sun images/room_0.jpg images/test/output.jpg

# Processing a Directory or Sequence of Images: If you want to process a directory or sequence of images, you can launch the program with the path to the directory that contains images or a wildcard sequence:

./segnet --network=fcn-resnet18-sun "images/room_*.jpg" images/test/room_output_%i.jpg

# *** Running the Live Camera Segmentation Demo***

./segnet --network=<model> csi://0 # MIPI CSI camera

./segnet --network=<model> /dev/video0 # V4L2 camera

./segnet --network=<model> /dev/video0 output.mp4 # save to video file

# Visualization: Displayed in the OpenGL window are the live camera stream overlayed with the segmentation output, alongside the solid segmentation mask for clarity. Here are some examples of it being used with different models that are available to try:

./segnet --network=fcn-resnet18-mhp csi://0

./segnet --network=fcn-resnet18-sun csi://0

./segnet --network=fcn-resnet18-deepscene csi://0

# ===========================> Pose Estimation with PoseNet

# Pose Estimation on Images

./posenet "images/humans_*.jpg" images/test/pose_humans_%i.jpg

# Pose Estimation from Video: To run pose estimation on a live camera stream or video, pass in a device or file path from the Camera Streaming and Multimedia page.

./posenet /dev/video0 # csi://0 if using MIPI CSI camera

./posenet --network=resnet18-hand /dev/video0

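# All available models, as listed in the checklist of the jetson-inference Model Downloader tool (on/off is the default selection; \Zb and \Zn are the dialog's bold on/off markers)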

# 1 "\ZbImage Recognition - all models (2.2 GB)\Zn" off \

# 2 " > AlexNet (244 MB)" off \

# 3 " > GoogleNet (54 MB)" on \

# 4 " > GoogleNet-12 (42 MB)" off \

# 5 " > ResNet-18 (47 MB)" on \

# 6 " > ResNet-50 (102 MB)" off \

# 7 " > ResNet-101 (179 MB)" off \

# 8 " > ResNet-152 (242 MB)" off \

# 9 " > VGG-16 (554 MB)" off \

# 10 " > VGG-19 (575 MB)" off \

# 11 " > Inception-v4 (172 MB)" off \

# 12 "\ZbObject Detection - all models (395 MB)\Zn" off \

# 13 " > SSD-Mobilenet-v1 (27 MB)" off \

# 14 " > SSD-Mobilenet-v2 (68 MB)" on \

# 15 " > SSD-Inception-v2 (100 MB)" off \

# 16 " > PedNet (30 MB)" off \

# 17 " > MultiPed (30 MB)" off \

# 18 " > FaceNet (24 MB)" off \

# 19 " > DetectNet-COCO-Dog (29 MB)" off \

# 20 " > DetectNet-COCO-Bottle (29 MB)" off \

# 21 " > DetectNet-COCO-Chair (29 MB)" off \

# 22 " > DetectNet-COCO-Airplane (29 MB)" off \

# 23 "\ZbMono Depth - all models (146 MB)\Zn" off \

# 24 " > MonoDepth-FCN-Mobilenet (5 MB)" on \

# 25 " > MonoDepth-FCN-ResNet18 (40 MB)" off \

# 26 " > MonoDepth-FCN-ResNet50 (100 MB)" off \

# 27 "\ZbPose Estimation - all models (222 MB)\Zn" off \

# 28 " > Pose-ResNet18-Body (74 MB)" on \

# 29 " > Pose-ResNet18-Hand (74 MB)" on \

# 30 " > Pose-DenseNet121-Body (74 MB)" off \

# 31 "\ZbSemantic Segmentation - all (518 MB)\Zn" off \

# 32 " > FCN-ResNet18-Cityscapes-512x256 (47 MB)" on \

# 33 " > FCN-ResNet18-Cityscapes-1024x512 (47 MB)" on \

# 34 " > FCN-ResNet18-Cityscapes-2048x1024 (47 MB)" off \

# 35 " > FCN-ResNet18-DeepScene-576x320 (47 MB)" on \

# 36 " > FCN-ResNet18-DeepScene-864x480 (47 MB)" off \

# 37 " > FCN-ResNet18-MHP-512x320 (47 MB)" on \

# 38 " > FCN-ResNet18-MHP-640x360 (47 MB)" off \

# 39 " > FCN-ResNet18-Pascal-VOC-320x320 (47 MB)" on \

# 40 " > FCN-ResNet18-Pascal-VOC-512x320 (47 MB)" off \

# 41 " > FCN-ResNet18-SUN-RGBD-512x400 (47 MB)" on \

# 42 " > FCN-ResNet18-SUN-RGBD-640x512 (47 MB)" off \

# 43 "\ZbSemantic Segmentation - legacy (1.4 GB)\Zn" off \

# 44 " > FCN-Alexnet-Cityscapes-SD (235 MB)" off \

# 45 " > FCN-Alexnet-Cityscapes-HD (235 MB)" off \

# 46 " > FCN-Alexnet-Aerial-FPV (7 MB)" off \

# 47 " > FCN-Alexnet-Pascal-VOC (235 MB)" off \

# 48 " > FCN-Alexnet-Synthia-CVPR (235 MB)" off \

# 49 " > FCN-Alexnet-Synthia-Summer-SD (235 MB)" off \

# 50 " > FCN-Alexnet-Synthia-Summer-HD (235 MB)" off \

# 51 "\ZbImage Processing - all models (138 MB)\Zn" off \

# 52 " > Deep-Homography-COCO (137 MB)" off \

# 53 " > Super-Resolution-BSD500 (1 MB)" off )